Retrieval-Augmented Generation (RAG)

Enhancing AI with Dynamic Knowledge Integration

Introduction

Retrieval-Augmented Generation (RAG) represents a transformative approach in natural language processing (NLP), merging the strengths of retrieval-based systems and generative AI models. By dynamically accessing external data during response generation, RAG addresses limitations of traditional models, offering more accurate, context-aware, and up-to-date answers. This article explores how RAG works, its applications, benefits, challenges, and future directions.

How RAG Works

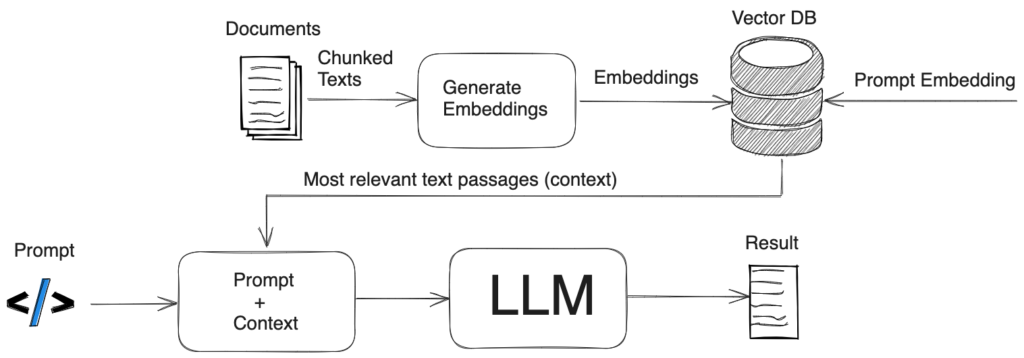

RAG operates through two key phases:

- Retrieval:

- When a query is received, the system converts it into a vector using an embedding model.

- A search is conducted over a pre-defined knowledge base (e.g., documents, databases) to find the most relevant information using similarity metrics (e.g., cosine similarity).

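- Dense retrievers such as Dense Passage Retrieval (DPR) improve the relevance of retrieved passages, while vector-search libraries such as FAISS (Facebook AI Similarity Search) make similarity search fast over large collections.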

- Generation:

- The retrieved documents are passed, together with the original query, to a generative model (e.g., BART, GPT-3).

- The model synthesizes the external data with its pre-trained knowledge to produce a coherent, contextually relevant response.
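The retrieval phase can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `embed` is a toy bag-of-words stand-in for a learned embedding model (a real system would use a neural encoder), and the brute-force scan over every document is the step that libraries like FAISS replace with an optimized index. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model (assumption): a sparse vector of
    # lowercase token counts. Real RAG systems use dense neural embeddings.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Dot product over shared tokens, divided by the product of the norms.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[tuple[float, str]]:
    # Embed the query, score every document, and return the k best matches.
    q = embed(query)
    scored = [(cosine_similarity(q, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


docs = [
    "To reset the router, hold the reset button for ten seconds.",
    "Our return policy allows refunds within 30 days.",
    "Firmware updates are installed from the router settings page.",
]
for score, doc in retrieve("How do I reset my router?", docs):
    print(f"{score:.2f}  {doc}")
```

Swapping `embed` for a real encoder and the list scan for a vector index changes the quality and speed of retrieval, but not the shape of the pipeline: embed, score, take the top k.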

This hybrid approach lets RAG move beyond the static knowledge fixed in a model at training time: updating the knowledge base makes new information available at query time without retraining.
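Because external knowledge reaches the model only through its input, the generation phase with an instruction-following model (GPT-style) is often implemented as prompt construction. The sketch below assumes that setup; the instruction wording, the numbered `[n]` source markers, and the function name are illustrative choices, not a standard format. (The original FAIR RAG model instead concatenates each passage with the input for its BART generator.)

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    # Number each passage so claims in the answer can be traced to a source.
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "Cite the supporting passages by their [n] markers.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


passages = [
    "To reset the router, hold the reset button for ten seconds.",
    "Firmware updates are installed from the router settings page.",
]
prompt = build_augmented_prompt("How do I reset my router?", passages)
print(prompt)
# In a full pipeline, `prompt` would now be sent to a generative model;
# that call is omitted because it depends on the chosen model or API.
```

The numbered markers are also what makes the Transparency benefit practical: the application can map each cited `[n]` back to the document it came from.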

Benefits of RAG

- Accuracy: Grounds responses in external sources (ideally curated and verified), reducing reliance on potentially outdated training data.

- Scalability: Incorporates new information by updating the knowledge base rather than retraining the model.

- Domain Adaptability: Excels in specialized fields (e.g., healthcare, legal) by referencing domain-specific corpora.

- Transparency: Provides traceability by citing retrieved documents, enhancing user trust.

Example: A customer service chatbot using RAG can pull the latest product manuals to resolve user issues, ensuring responses align with current policies.
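The chatbot example rests on one mechanism: new or revised documents are re-embedded and written into the index, and the model's weights are never touched. The in-memory `KnowledgeBase` class below is a hypothetical sketch of that idea, reusing a toy bag-of-words embedding as a stand-in for a real embedding model; document IDs and texts are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class KnowledgeBase:
    """Hypothetical in-memory index keyed by document ID."""

    def __init__(self) -> None:
        self._vectors: dict[str, Counter] = {}
        self._texts: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Adding or replacing a document only updates the index, never the model.
        self._texts[doc_id] = text
        self._vectors[doc_id] = embed(text)

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._vectors, key=lambda d: cosine(q, self._vectors[d]), reverse=True)
        return [self._texts[d] for d in ranked[:k]]


kb = KnowledgeBase()
kb.upsert("manual-router", "Warranty period for the router is one year.")
kb.upsert("policy-returns", "Returns are accepted within 30 days.")
print(kb.search("router warranty period"))
# The manual is revised: re-indexing the same ID replaces the stale text.
kb.upsert("manual-router", "Warranty period for the router is two years.")
print(kb.search("router warranty period"))
```

Keying documents by ID is one simple way to keep outdated versions out of results; production vector stores offer comparable upsert or delete operations.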

Applications of RAG

- Customer Support:

- Integrates FAQs, manuals, and ticket histories to deliver precise answers.

- Healthcare:

- Retrieves medical guidelines or research papers to assist in diagnosis or treatment recommendations.

- Academic Research:

- Provides summaries of recent studies by querying academic databases.

- Legal Compliance:

- Cross-references regulatory documents to support accurate legal research and compliance checks.

Challenges and Limitations

- Retrieval Quality: If irrelevant documents are retrieved, the generated response suffers; the knowledge base needs robust curation and indexing.

- Latency: Combined retrieval and generation steps may slow real-time applications.

- Bias Propagation: Risks inheriting biases from the external data source.

- Maintenance Overhead: Regularly updating the knowledge base is resource-intensive.
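One common, partial mitigation for the retrieval-quality problem is a relevance gate between retrieval and generation: passages whose similarity score falls below a cut-off are dropped, and if nothing survives, the system declines or falls back instead of generating from unrelated text. The sketch assumes the retriever already returns `(score, passage)` pairs; `MIN_SCORE` and the example scores are hypothetical values, and a real threshold would need tuning on representative queries.

```python
MIN_SCORE = 0.3  # Hypothetical cut-off; tune on real queries.


def select_context(
    scored_passages: list[tuple[float, str]], min_score: float = MIN_SCORE
) -> list[str]:
    # Keep only passages whose score clears the threshold, best first.
    kept = sorted(
        (pair for pair in scored_passages if pair[0] >= min_score),
        key=lambda pair: pair[0],
        reverse=True,
    )
    return [text for _, text in kept]


# Made-up scores for illustration only.
retrieved = [
    (0.82, "To reset the router, hold the reset button for ten seconds."),
    (0.12, "Our return policy allows refunds within 30 days."),
]
context = select_context(retrieved)
if context:
    print(f"Passing {len(context)} passage(s) to the generator")
else:
    print("No sufficiently relevant passages; falling back instead of generating")
```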

Future Directions

- Efficient Retrieval:

- Advances in vector indexing, such as Hierarchical Navigable Small World (HNSW) graphs, to accelerate approximate similarity search.

- Interactive RAG:

- Allowing users to refine queries iteratively based on initial results.

- Bias Mitigation:

- Implementing fairness checks on retrieved content.

- Integration with Advanced Models:

- Pairing RAG with models like GPT-4 for richer contextual understanding.
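HNSW speeds up search by walking a proximity graph instead of scanning every vector. The sketch below shows only the core idea, greedy search on a single-layer nearest-neighbour graph; it is a deliberate simplification, not HNSW itself, which builds its graph incrementally, adds a hierarchy of sparser layers for long-range jumps, and keeps a wider candidate list (its `ef` parameter) to avoid getting stuck. All function names are hypothetical.

```python
import math


def build_knn_graph(points: list[list[float]], m: int) -> dict[int, list[int]]:
    # Link each point to its m nearest neighbours (brute force, for illustration).
    graph: dict[int, list[int]] = {}
    for i, p in enumerate(points):
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: math.dist(p, points[j]),
        )
        graph[i] = others[:m]
    return graph


def greedy_search(
    points: list[list[float]], graph: dict[int, list[int]], query: list[float], entry: int = 0
) -> int:
    # Hop to whichever neighbour is closest to the query; stop when no
    # neighbour improves on the current node (a local minimum).
    current = entry
    current_dist = math.dist(points[current], query)
    while graph[current]:
        best = min(graph[current], key=lambda j: math.dist(points[j], query))
        best_dist = math.dist(points[best], query)
        if best_dist >= current_dist:
            break
        current, current_dist = best, best_dist
    return current


pts = [[float(x)] for x in range(10)]
graph = build_knn_graph(pts, m=2)
query = [7.2]
exact = min(range(len(pts)), key=lambda i: math.dist(pts[i], query))
print(greedy_search(pts, graph, query), exact)
```

Each hop only evaluates a node's few neighbours, which is where the savings come from; the cost is that a plain greedy walk can stop at a local minimum, which is exactly what HNSW's layers and candidate list are designed to mitigate.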

Tools and Frameworks

- Haystack: An open-source framework for building end-to-end RAG pipelines.

- Hugging Face Transformers: Provides RAG model classes (e.g., RagRetriever, RagSequenceForGeneration) and pre-trained RAG checkpoints.

- FAIR’s RAG Model: The original implementation by Facebook AI Research, combining a DPR (Dense Passage Retrieval) retriever with a BART seq2seq generator.
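In the original RAG formulation (Lewis et al., 2020), retriever and generator are combined probabilistically. The DPR retriever (parameters $\eta$) scores a passage $z$ for input $x$ by the inner product of a passage embedding $\mathbf{d}(z)$ and a query embedding $\mathbf{q}(x)$; the seq2seq generator (parameters $\theta$) produces the output $y = (y_1, \dots, y_N)$, and the result is marginalized over the top-$k$ retrieved passages. RAG-Sequence uses one passage for the whole output, while RAG-Token lets each token draw on a different passage:

```latex
\begin{aligned}
p_\eta(z \mid x) &\propto \exp\!\big(\mathbf{d}(z)^\top \mathbf{q}(x)\big) \\[4pt]
p_{\text{RAG-Sequence}}(y \mid x) &\approx \sum_{z \in \operatorname{top-}k\left(p_\eta(\cdot \mid x)\right)}
  p_\eta(z \mid x) \prod_{i=1}^{N} p_\theta(y_i \mid x, z, y_{1:i-1}) \\[4pt]
p_{\text{RAG-Token}}(y \mid x) &\approx \prod_{i=1}^{N} \sum_{z \in \operatorname{top-}k\left(p_\eta(\cdot \mid x)\right)}
  p_\eta(z \mid x)\, p_\theta(y_i \mid x, z, y_{1:i-1})
\end{aligned}
```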

Ethical Considerations

- Ensure external data sources are credible and unbiased.

- Audit systems regularly to prevent misinformation or harmful content propagation.
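One concrete, if partial, way to act on the credibility requirement is to filter retrieved content by source before it reaches the generator. The sketch assumes each retrieved document carries a `url` field; `TRUSTED_DOMAINS` and the example URLs are hypothetical placeholders for a curated, regularly audited allowlist. Source filtering does not detect bias within trusted sources; it only keeps unvetted sources out.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; in practice this would be curated and audited.
TRUSTED_DOMAINS = {"example.com", "example.org"}


def is_trusted(url: str, trusted: set[str] = TRUSTED_DOMAINS) -> bool:
    host = urlparse(url).hostname or ""
    # Accept the domain itself or a subdomain. The leading dot prevents
    # look-alike hosts such as "evil-example.com" from matching "example.com".
    return any(host == d or host.endswith("." + d) for d in trusted)


def filter_sources(docs: list[dict]) -> list[dict]:
    # Drop retrieved documents whose source is not on the allowlist.
    return [doc for doc in docs if is_trusted(doc["url"])]


retrieved_docs = [
    {"url": "https://kb.example.com/manual", "text": "Official manual excerpt."},
    {"url": "https://evil-example.com/post", "text": "Unvetted forum post."},
]
print([doc["text"] for doc in filter_sources(retrieved_docs)])
```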

Conclusion

RAG bridges the gap between static generative models and dynamic real-world data, offering a scalable solution for accurate, context-driven AI. While challenges like latency and bias persist, ongoing advancements in retrieval efficiency and model integration promise to expand its impact across industries. By thoughtfully implementing RAG, developers can create systems that not only answer questions but do so with the depth and reliability that modern applications demand.

Next Steps:

- Experiment with RAG using platforms like Haystack.

- Explore domain-specific knowledge bases to tailor solutions.

- Stay updated on hybrid architectures combining RAG with reinforcement learning or multimodal inputs.

In the evolving landscape of AI, RAG stands out as a pivotal innovation, transforming how machines understand and interact with the world.